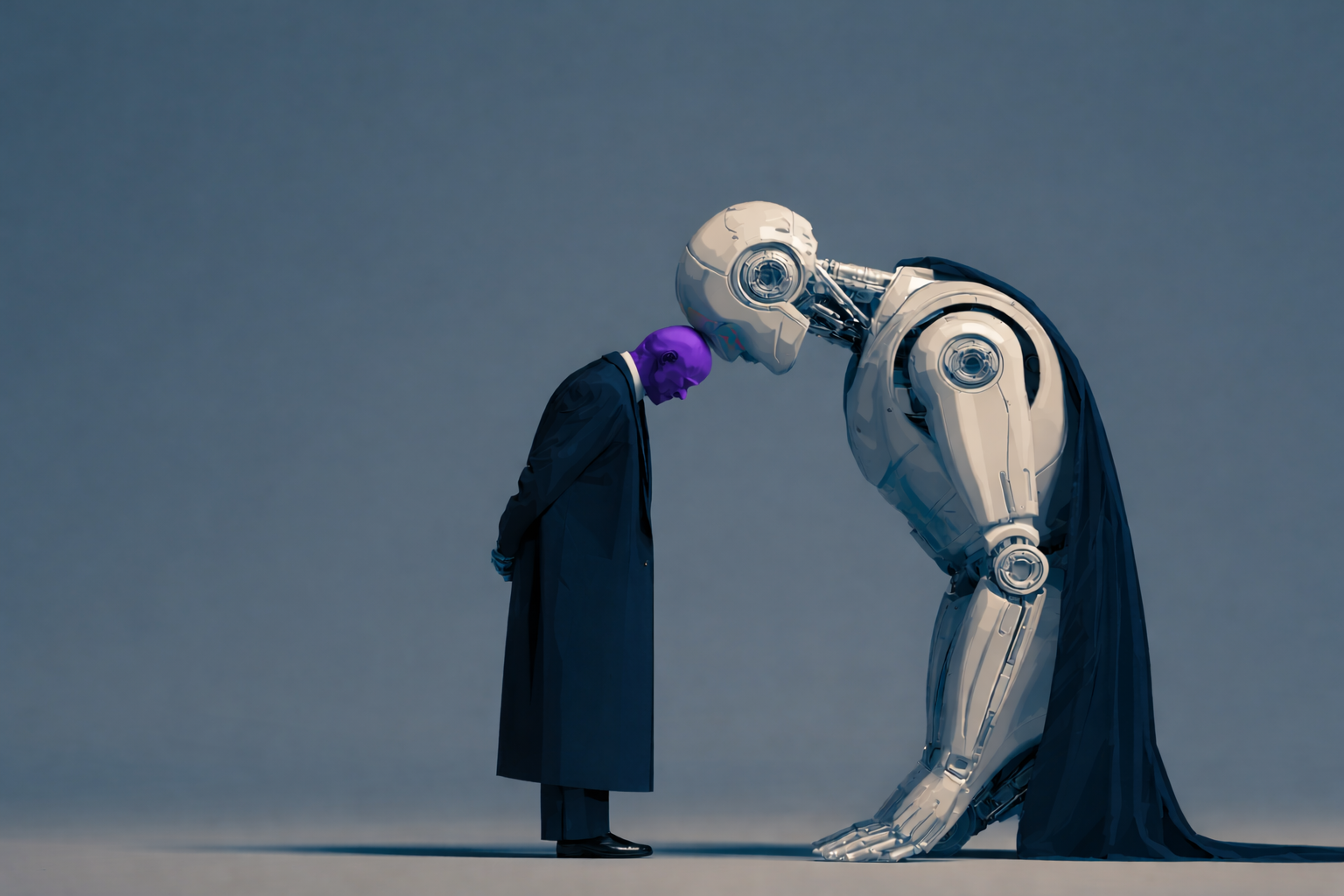

AI Adoption Isn’t a Tooling Problem. It’s a Judgment Problem.

AI value doesn’t come from better tools. It comes from better judgment. Those who manage context, structure work intentionally, iterate intelligently, and calibrate trust reshape workflows. The rest install software and call it transformation.

Most conversations about AI adoption still orbit tools.

Which model.

Which interface.

Which vendor.

Which feature set.

That framing assumes progress comes from improving access to capability.

In practice, access has rarely been the constraint.

Across organizations experimenting with AI-assisted work, a different pattern consistently emerges.

The teams realizing measurable impact are not the ones with the newest tools. They are the ones developing the judgment and management capabilities that reshape how work flows through the value stream.

These are not technical skills.

They are operational capabilities.

And they reorganize production systems.

To be clear: tooling matters. Better models, better interfaces, and better integrations create real leverage. But they create leverage for people who already know how to direct it. Without the underlying judgment capabilities, better tools just run the same shallow patterns faster.

Four capabilities consistently sit at the center:

- Context Management

- Problem Decomposition

- Iterative Refinement

- Trust Calibration

Context Management

The capability

The ability to shape the signal that guides execution before work begins.

It governs:

- what information enters the system

- how constraints are defined

- how intent is structured

- how ambiguity is bounded

This is not prompt technique.

It is information architecture.

AI operates inside the context envelope provided. Poor context amplifies noise. Strong context amplifies leverage. This capability forces organizations to formalize knowledge assembly and convert informal expertise into structured execution input.

Example: Legal Contract Review

Low capability approach

A lawyer pastes a contract into an AI tool and asks:

“Summarize risks.”

Output is generic and inconsistent. Trust erodes.

High capability approach

Context includes:

- jurisdiction constraints

- risk definitions

- client exposure thresholds

- comparable precedent clauses

The output becomes actionable and reliable.

The difference is not tooling.

It is context architecture.

Example: Engineering Incident Analysis

Low context

“Analyze this log.”

Structured context

- system topology

- recent deploy changes

- known failure patterns

- environment metadata

Now the system produces signal rather than guesswork.

Context reshapes work initiation.
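A minimal sketch of the incident-analysis example, assuming Python 3.9+: context is captured as a structured object and rendered ahead of the raw log, so constraints and intent arrive before the data. The `IncidentContext` class, its field names, the `build_prompt` helper, and the sample values are all hypothetical; the rendered text would be passed to whatever model interface a team already uses.

```python
from dataclasses import dataclass, field


@dataclass
class IncidentContext:
    """Hypothetical container for the four context elements listed above."""
    system_topology: str
    recent_deploys: list[str] = field(default_factory=list)
    known_failure_patterns: list[str] = field(default_factory=list)
    environment: dict[str, str] = field(default_factory=dict)


def bullets(items: list[str]) -> str:
    """Render items as a dash list, with a placeholder when nothing was recorded."""
    return "\n".join(f"- {item}" for item in items) or "- (none recorded)"


def build_prompt(ctx: IncidentContext, log_excerpt: str) -> str:
    """Render structured context, then the task, then the raw log (hypothetical helper)."""
    sections = [
        "## System topology\n" + ctx.system_topology,
        "## Recent deploy changes\n" + bullets(ctx.recent_deploys),
        "## Known failure patterns\n" + bullets(ctx.known_failure_patterns),
        "## Environment\n" + bullets([f"{k}: {v}" for k, v in sorted(ctx.environment.items())]),
        "## Task\nIdentify the most likely cause of the errors in the log, "
        "and cite the context item that supports each hypothesis.",
        "## Log\n" + log_excerpt,
    ]
    return "\n\n".join(sections)


# Illustrative values only.
ctx = IncidentContext(
    system_topology="api-gateway -> orders-service -> postgres (primary + replica)",
    recent_deploys=["orders-service: connection pool size reduced from 50 to 10"],
    known_failure_patterns=["pool exhaustion surfaces as 503s at the gateway"],
    environment={"env": "production", "region": "eu-west-1"},
)
print(build_prompt(ctx, "gateway: 503 Service Unavailable from orders-service (412 in 5 min)"))
```

The point of the design is that context is assembled from named fields rather than typed ad hoc each time, which is one way informal expertise becomes reusable, structured execution input.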

Problem Decomposition

The capability

The ability to partition work into routable execution units.

It governs:

- task boundaries

- delegation routing

- orchestration structure

- parallel execution potential

AI struggles with monolithic problem surfaces.

It excels on well-defined partitions.

This capability redefines the atomic unit of work inside the organization.

Example: Marketing Campaign Generation

Monolithic task

“Create campaign strategy.”

Output is shallow.

Decomposed execution

- Audience segmentation

- Value prop exploration

- Channel message variants

- Performance hypothesis generation

Each segment becomes AI-assisted and composable.

Work structure changes.

Output quality changes.

Velocity changes.

Example: Software Feature Development

Undecomposed

“Build recommendation engine.”

Decomposed

- data assumptions

- ranking logic candidates

- edge case modeling

- evaluation criteria

AI becomes infrastructure rather than a suggestion engine.

Decomposition reshapes execution topology.

This isn’t managerial judgment. It’s engineering cognition: understanding the work, breaking it apart, and accelerating it through the system with the aid of AI.
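Decomposition becomes easier to route when each work unit records what it depends on. The sketch below uses the recommendation-engine breakdown from the example; the dependency edges between units and the `execution_waves` helper are illustrative assumptions, not a prescribed plan. Grouping units into waves shows which ones can proceed in parallel and which must wait.

```python
def execution_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group work units into waves; every unit in a wave depends only on earlier waves.

    `deps` maps each unit to the set of units it depends on.
    Raises ValueError if the dependencies contain a cycle.
    """
    remaining = {unit: set(d) for unit, d in deps.items()}
    # Units mentioned only as dependencies still need a slot in some wave.
    for d in deps.values():
        for unit in d:
            remaining.setdefault(unit, set())
    done: set[str] = set()
    waves: list[list[str]] = []
    while remaining:
        ready = sorted(u for u, d in remaining.items() if d <= done)
        if not ready:
            raise ValueError("cyclic dependencies: " + ", ".join(sorted(remaining)))
        waves.append(ready)
        done.update(ready)
        for unit in ready:
            del remaining[unit]
    return waves


# Hypothetical dependencies for the recommendation-engine example.
recommendation_engine = {
    "data assumptions": set(),
    "evaluation criteria": set(),
    "ranking logic candidates": {"data assumptions"},
    "edge case modeling": {"data assumptions", "ranking logic candidates"},
}

for i, wave in enumerate(execution_waves(recommendation_engine), start=1):
    print(f"wave {i}: {', '.join(wave)}")
# wave 1: data assumptions, evaluation criteria
# wave 2: ranking logic candidates
# wave 3: edge case modeling
```

Units in the same wave share no dependencies, so they are natural candidates for parallel, AI-assisted execution; the cycle check surfaces partitions whose boundaries are not yet well defined.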

Iterative Refinement

The capability

The ability to drive convergence through structured feedback cycles.

It governs:

- output shaping

- error discovery timing

- learning incorporation

- convergence velocity

Traditional workflows assume linear progression: Draft → Review → Final

AI workflows become convergent: Generate → Evaluate → Adjust → Repeat
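The convergent loop can be sketched as a bounded cycle with an explicit stopping rule. In this minimal sketch, `generate` and `evaluate` are hypothetical stand-ins (a real workflow would call a model and a human or automated review), and the threshold and round budget are assumed values; what matters is the structure: each round evaluates the output, feeds the gaps back as new constraints, and stops on a quality threshold or when the budget runs out.

```python
from typing import Callable

Draft = str
Evaluation = tuple[float, list[str]]  # (score between 0 and 1, gaps found by the reviewer)


def refine(
    generate: Callable[[list[str]], Draft],
    evaluate: Callable[[Draft], Evaluation],
    threshold: float = 0.9,
    max_rounds: int = 5,
) -> tuple[Draft, float, int]:
    """Generate -> Evaluate -> Adjust -> Repeat until good enough or out of budget.

    Returns (draft, score, rounds_used).
    """
    constraints: list[str] = []
    best_draft, best_score = "", -1.0
    for round_no in range(1, max_rounds + 1):
        draft = generate(constraints)              # Generate
        score, gaps = evaluate(draft)              # Evaluate
        if score > best_score:
            best_draft, best_score = draft, score
        if score >= threshold or not gaps:
            return draft, score, round_no          # converged, or nothing left to adjust
        constraints += [g for g in gaps if g not in constraints]  # Adjust: feed gaps back
    return best_draft, best_score, max_rounds      # budget spent: keep the best attempt


# Toy stand-ins for a model and a reviewer (hypothetical; no AI involved).
REQUIRED = ["pricing rationale", "risk mitigation", "success metrics"]


def toy_generate(constraints: list[str]) -> Draft:
    return "Proposal covering: " + ", ".join(constraints or ["general overview"])


def toy_evaluate(draft: Draft) -> Evaluation:
    missing = [r for r in REQUIRED if r not in draft]
    return 1 - len(missing) / len(REQUIRED), missing[:1]  # surface one gap per round


draft, score, rounds = refine(toy_generate, toy_evaluate)
print(rounds, score)  # 4 1.0
```

The round budget is the part that separates convergence from endless regeneration: when it runs out, the loop returns its best attempt rather than the latest one.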

Example: Proposal Development

Linear mindset

- Generate draft

- Edit manually

- Submit

Refinement mindset

- Generate options

- Evaluate positioning strength

- Reframe narrative

- Re-run synthesis

- Stress test assumptions

Quality emerges through convergence.

This compresses feedback loops and reduces downstream rework.

Example: Product Ideation

AI produces mediocre concept → abandoned

vs

Concept generated → constraint added → regenerated → tested → evolved

Iteration turns noise into value.

Trust Calibration

Including Frontier Recognition

The capability

The ability to allocate verification effort proportionally to risk and capability boundaries.

It governs:

- validation depth

- oversight placement

- risk exposure

- acceptance thresholds

Frontier recognition lives inside this capability.

Understanding where AI performs reliably informs where trust should be allocated.

Example: Financial Forecasting

Over-trust

AI output accepted wholesale

Leads to flawed planning

Under-trust

Everything manually redone

No value gained

Calibrated

- scenario generation trusted

- numeric validation checked

- decision framing reviewed

Trust becomes resource allocation.
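Treating trust as resource allocation can be made explicit with a simple policy that maps each output element to a verification depth. The sketch below is a hypothetical policy, not a standard: the 1 to 3 risk scale, the reliability estimates, and the `reliable_at` threshold are assumptions a team would set from its own frontier mapping. The three elements of the forecasting example serve as inputs.

```python
from dataclasses import dataclass
from enum import Enum


class Verification(Enum):
    ACCEPT = "accept with spot checks"
    CHECK = "check against source data"
    REVIEW = "expert review"


@dataclass
class OutputElement:
    name: str
    risk: int           # consequence of an undetected error: 1 (low) to 3 (high)
    reliability: float  # team's observed hit rate for this kind of task, 0 to 1


def verification_depth(e: OutputElement, reliable_at: float = 0.9) -> Verification:
    """Hypothetical policy: spend review effort where risk is high or reliability is unproven."""
    if e.risk >= 3:
        return Verification.REVIEW
    if e.risk == 2 or e.reliability < reliable_at:
        return Verification.CHECK
    return Verification.ACCEPT


# Illustrative scores for the forecasting example.
forecast = [
    OutputElement("scenario generation", risk=1, reliability=0.95),
    OutputElement("numeric figures", risk=2, reliability=0.80),
    OutputElement("decision framing", risk=3, reliability=0.70),
]
for element in forecast:
    print(f"{element.name}: {verification_depth(element).value}")
```

As frontier mapping improves, adjusting the risk scores or `reliable_at` shifts where effort goes without changing the structure of the policy.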

Example: Clinical Documentation

AI drafts a summary:

- Language trusted

- Diagnostic inference verified

- Compliance elements checked

Frontier awareness informs validation strategy.

This enables scale without risk explosion.

Others have observed this gap. Nate B. Jones frames it well: the distance between exposure and impact is filled by judgment and management capability, not better tooling. The question is what to do about it.

The Missing Middle

I watched a team with $200K in AI infrastructure produce worse outcomes than a solo practitioner with a $20/month subscription. The difference was not budget. It was judgment.

One consistent reality across organizations is uneven capability distribution.

A small minority operate fluidly across these capabilities. For them, AI behaves like high-performance flight gear. It expands reach, speed, and maneuverability dramatically.

Most practitioners do not yet possess these capabilities. Tool access does not provide them by default. Without decomposition instincts, iteration discipline, or calibrated trust, AI interaction feels unreliable or inefficient.

The result is predictable:

Experimentation → Frustration → Disengagement

This gap is often misdiagnosed as a tooling problem.

It is a capability distribution problem.

This gap sits squarely in the missing middle that Vibe-to-Value™ is designed to address.

Vibe-to-Value is an operating methodology we are developing at 8th Light to guide how organizations move from intent to reliable impact when working with AI systems. It frames execution as movement through three interacting loops:

- Vibe — shaping intent, assembling context, and defining the signal that frames work

- Forge — structuring execution, decomposing problems, and iterating toward convergence

- Mature — calibrating trust, validating outcomes, and integrating results into governed workflows

A small fraction of practitioners move fluidly across these loops. They shape context deliberately, partition work naturally, iterate with purpose, and apply calibrated trust.

Most remain focused on interacting with tools rather than reshaping how work flows through these stages.

That is the missing middle between exposure and operational fluency.

Organizations that succeed scaffold capability development through:

- workflow guardrails

- frontier mapping

- shared learning loops

- platform mediation

- governance structures

This is where most AI transformation programs stall. They invest in enablement through training sessions, tool rollouts, and prompt libraries, then wonder why adoption plateaus at experimentation. The missing middle is not a knowledge gap that closes with a workshop. It is an operational capability developed through structured practice on real work, with feedback loops that reveal where judgment is weak and where it is strong.

Organizations pulling ahead treat this as a capability development challenge rather than a change management exercise.

Closing this gap is not about expanding tool catalogs.

It is about cultivating judgment capability.

Judgment and Management vs Tooling Skills

Tooling skills determine how interfaces are operated.

Judgment capabilities determine:

- what work should be done

- how work should be structured

- where execution occurs

- how outcomes are validated

- how risk is bounded

Tools accelerate execution speed.

Judgment reshapes execution structure.

This maps directly to the AI Decision Loop framing. Across thousands of real-world AI interactions, the pattern was consistent. Value never emerged from generation itself. It emerged from the surrounding mechanics: context framing, judgment application, validation, and the incorporation of learning across iterations.

Capabilities govern the loop.

Tools participate within it.

Closing

The next phase of AI adoption will not be defined by model advancement alone. It will be defined by how effectively organizations cultivate the judgment capabilities required to manage probabilistic collaborators and restructure value production around them.

Investing in tools is easy.

Developing judgment capability is harder.

That is exactly why durable advantage accumulates there.